CSAM – Scanning end-to-end encrypted conversations, and scanning for unknown content and grooming, is particularly problematic

First and foremost, it is essential to understand that the Commission’s proposal for a regulation laying down rules to prevent and combat child sexual abuse (CSAM / Chat Control) may inadvertently make our fight against abuse harder rather than aiding it. Specifically, scanning end-to-end encrypted conversations and scanning for unknown content and grooming will prove ineffective in catching abusers.

The Commission is acting like they can wave a magic “digital” wand and solve child abuse, but their proposal actually risks making it harder to catch abusers while endangering everyone’s privacy.

The truth is that there is no “easy” solution to child abuse: if we actually want to fight child abuse and protect children, then we need to:

- train teachers and social workers to better recognise patterns of abuse,

- and give them the financial and human resources to better oversee vulnerable children.

My concerns are explained in detail in my white paper, as well as in the European Parliament’s complementary impact assessment. Both highlight problems with the proposal’s efficacy as well as its impact on privacy.

My view and the analyses by the European Parliamentary Research Service (EPRS) join a chorus of worried voices about the proposal. These voices range from the European Data Protection Board, which has called the proposal “illegal”, to the French Senate, the Irish and Austrian parliaments, and now even the Council’s legal service, all of which have expressed serious reservations about legality and efficacy.

Below I will show why scanning end-to-end encrypted conversations and scanning for unknown content and grooming are particularly problematic.

Scanning of end-to-end encrypted conversations in general

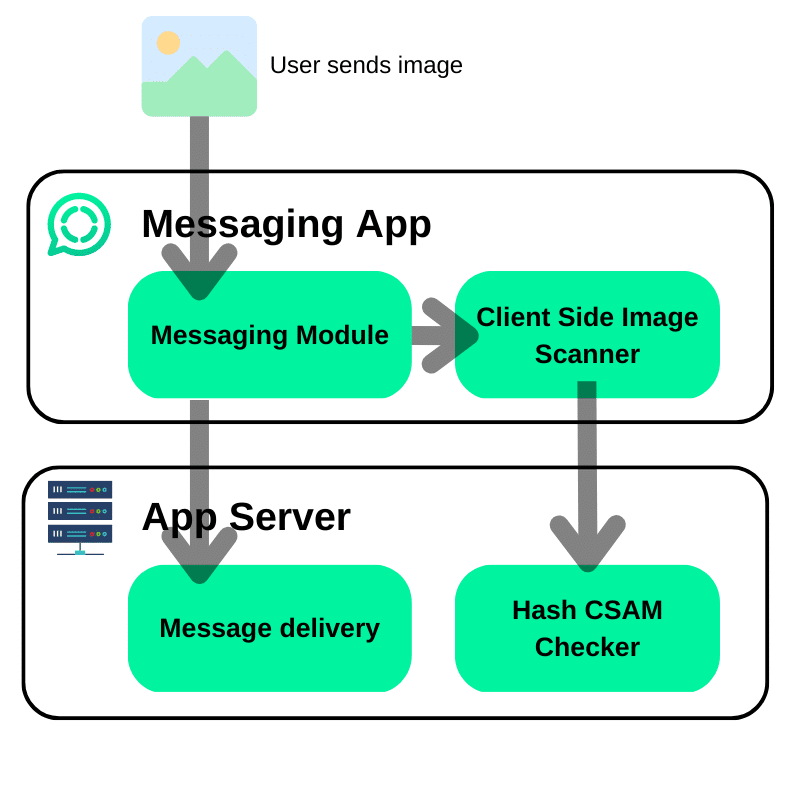

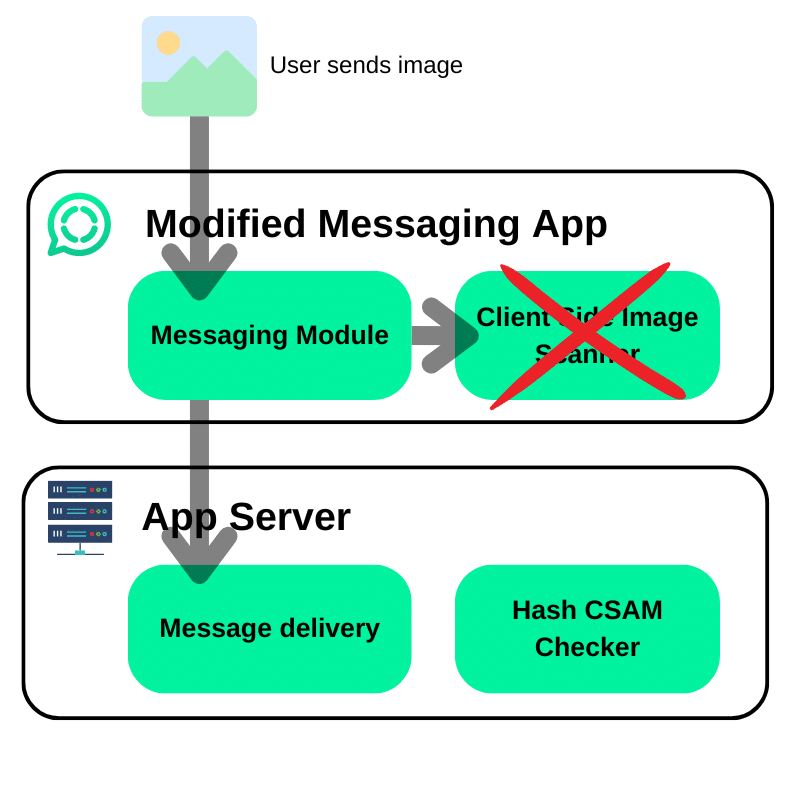

Client-side scanning would affect all innocent citizens, but abusers and cybercriminals would quickly circumvent it. In end-to-end encrypted chat services, scanning would have to be done by the chat app on the abuser’s own phone: so-called client-side scanning. The problem is that abusers, and the organised criminal networks supporting them, will simply modify the app to disable or break the scanning, and modified versions of the app would spread quickly.

This is not science fiction: modified chat apps are already popular. For example, there are modified versions of WhatsApp that bypass restrictions put in place by Meta.

Scanning for unknown content and grooming

Unlike scanning for known content, scanning for unknown content and grooming essentially involves using AI to read citizens’ conversations and look at their photos in search of grooming or abuse material. Quite apart from the obvious privacy issues, these methods will be dangerously unreliable, because AI cannot understand intent.

For instance, scanning conversations for unknown CSAM could flag intimate images sent between teenage couples as CSAM, as AI cannot tell the difference between consensually shared images and abuse material.

This is no minor issue. In the EU, about a third of teenagers under the age of 18 sent intimate images to their partners in the last year, which means that in the EU alone teenagers under 18 sent at least 24 million intimate images. This could result in tens of millions of false positives a year, leaving the EU Centre or local law enforcement even less time to fight real abuse.
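To make the scale concrete, here is a minimal back-of-the-envelope sketch in Python. The 24 million figure is the estimate given above. The flag rates are purely hypothetical assumptions, since no reliable error rate for such a classifier is given here, and the function name is invented for illustration.

```python
def expected_false_reports(benign_images: int, flag_rate: float) -> int:
    """Expected number of consensually shared images wrongly flagged per year.

    benign_images: images that are not abuse material (estimate from the text).
    flag_rate: share of those images the scanner flags anyway (hypothetical).
    """
    if not 0.0 <= flag_rate <= 1.0:
        raise ValueError("flag_rate must be between 0 and 1")
    return round(benign_images * flag_rate)


# Lower-bound estimate from the text: intimate images sent by EU teenagers per year.
IMAGES_PER_YEAR = 24_000_000

# Hypothetical flag rates, chosen only to show how the numbers scale.
for rate in (0.1, 0.5, 1.0):
    flagged = expected_false_reports(IMAGES_PER_YEAR, rate)
    print(f"flag rate {rate:.0%}: about {flagged:,} false reports per year")
```

Whatever rate one assumes, every one of these reports would need human review before anyone could tell it apart from real abuse material.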

When it comes to grooming detection, even proponents of the CSAM regulation recognise that it is not reliable enough and could cause numerous false positives or false negatives.

The regulation could also be cited as an excuse to use similar technology to enforce anti-LGBTQI+ education laws in countries where education on LGBTQI+ rights is regularly labelled as “grooming”. Even in more liberal countries, false positives would risk outing LGBTQI+ children to hostile families.

Do not ignore technical and legal advice – child abuse must be stopped!

We need to look at how and when we use technology to prevent child abuse. Rather than using grooming detection to flag and report, we should use technology to warn kids about suspicious messages, so they can identify danger and move away from it. Rather than stigmatising teens by calling the police on them for sharing nudes, we should use nudity detection to help teens make informed decisions, while also providing tools to help them when things go wrong.

This regulation should let kids explore independently while learning about risks in an environment built to protect them that offers agency, privacy, and safety.

Our wish to “do something” should not push us to do the wrong thing! We must not ignore the critical voices.

My concerns, and those of others in the European Parliament, join a chorus of worried voices. Listen to them, because:

If we pass legislation that later gets struck down by the European Court of Justice as illegal…

… we won’t be helping kids.

If we pass legislation that mandates easily-bypassed scanning…

… we won’t be helping kids.

If we pass legislation that floods law enforcement with false positives…

… we won’t be helping kids.

We must change this proposed legislation so that it really can help kids!

Because we cannot accept that criminal networks, or the friends and family of vulnerable children, abuse them with impunity.

Photo by Miquel Parera on Unsplash